Uncovering a Four Year Old Bug

Apr 19, 2021 | samczsun

Every adventure requires a first step - The Cheshire Cat

What does it take to find a bug? What about one in a contract that's survived the test of time? The journey to a critical vulnerability isn't always straightforward and might begin when you least expect it.

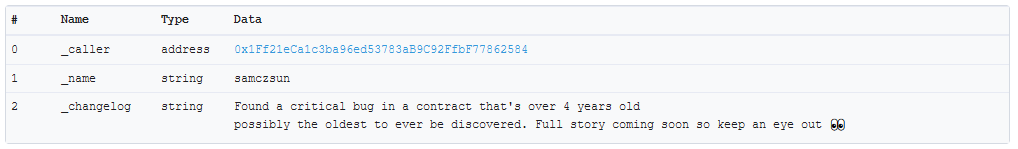

Found a critical bug in a contract that's over 4 years old, possibly the oldest to ever be discovered. Full story coming soon so keep an eye out 👀

— samczsun (@samczsun) April 5, 2021

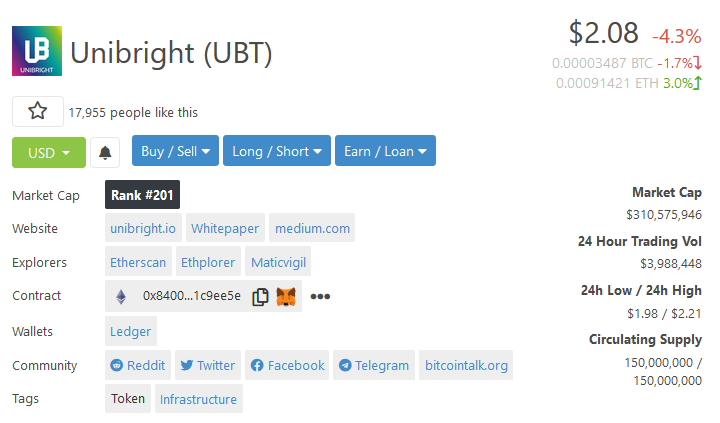

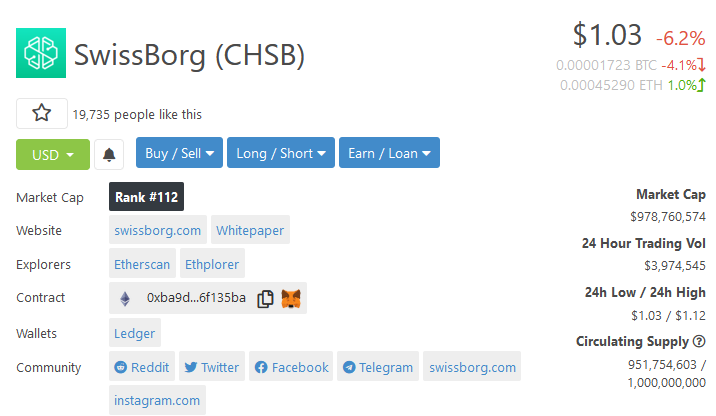

A few weeks ago I tweeted about a critical bug I had found. The bug affected contracts that are over four years old and now manage over a billion dollars in assets. Today, I'll tell you the story of how it happened.

The White Rabbit

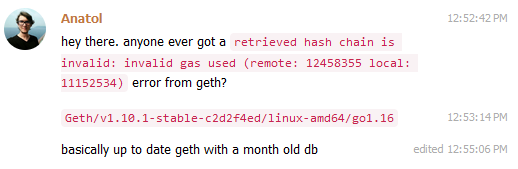

It was just after lunch when I got a push notification from one of the Ethereum security group chats.

This immediately caught my attention for two reasons:

- Most users of go-ethereum (geth) will normally never run into an error, because the software is written to be extremely resilient

- This error was occurring on the latest version of geth, meaning it had all the newest security patches

Having just found a bug in geth myself recently, I couldn't help but wonder if there was an ongoing attack.

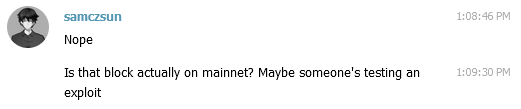

Anatol confirmed that the block was indeed on mainnet and shared his log file to help with debugging.

########## BAD BLOCK #########

Chain config: {ChainID: 1 Homestead: 1150000 DAO: 1920000 DAOSupport: true EIP150: 2463000 EIP155: 2675000 EIP158: 2675000 Byzantium: 4370000 Constantinople: 7280000 Petersburg: 7280000 Istanbul: 9069000, Muir Glacier: 9200000, YOLO v2: <nil>, Engine: ethash}

Number: 11805072

Hash: 0x0b3d0081b32c2159e2b77e5f2c798b737d0ae1a822669614c989377947c71ada

0: cumulative: 123312 gas: 123312 contract: 0x0000000000000000000000000000000000000000 status: 1 tx: 0x9bdf8792e0d6b62a68b9dbd849d60ca78ae6512d9a838d575d4f51d36a7ae095 logs: [0xc12c5d6000 0xc12c5d60b0 0xc12c5d6160 0xc12c5d6210 0xc12c5d62c0] bloom: 00200000000000040000000080000000000000000000001000010001000000000000000000000000000000000000000002000000080800000000000000000000000000000000000000000008000000200000000000000000000000008000010000000000000000000000000000000010000000000000000000000010000000000000000000000000004000000000000000000001000000080000004000000000000000000000000000000000000000000000000000000000000000000000000000008002000082000000000040000000000000000000001002000000000020000000200000000000000000000000000000000000000000400080000000000000 state:

[snip]

203: cumulative: 11152534 gas: 45752 contract: 0x0000000000000000000000000000000000000000 status: 1 tx: 0x63fe497529605f75abdaa5b026ef5e6d878fca827a7ee2a3168d9c4f0347baef logs: [0xc127c05970] bloom: 00000000000000000000000000000000000000000800000000010000000000000000000000000000000000000000000000000800000000000000000000200000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000005000000000000000000000000000000000000000000000000000004000000000000000000000000000000000000000000000020000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000020000034000000000000000000000000000000000000000000000000000000000000 state:

Error: invalid gas used (remote: 12458355 local: 11152534)

##############################

This error says that the block header claimed that all of the transactions used a total of 12458355 gas, but when the node executed all of the transactions it only used 11152534 gas instead. I first double-checked that my own node knew of the suspicious block.

$ seth block 11805072 hash

0x0b3d0081b32c2159e2b77e5f2c798b737d0ae1a822669614c989377947c71ada

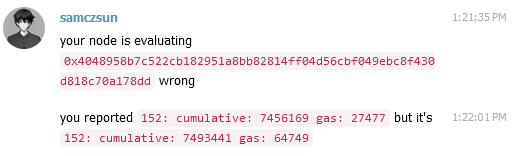

Given that the block hash matched, I knew that this wasn't an exploit but rather some sort of error with Anatol's node. The error log tells us the gas used by each transaction, so I quickly wrote a script to check each transaction in the block and compare the actual gas used with what Anatol's node reported. This let me pinpoint the offending transaction.
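
A script along those lines might look like the following minimal sketch. It assumes the receipt-line format of the log above and takes the canonical per-transaction gas figures as a plain dictionary (in practice they would come from a trusted node's receipts). The function names `parse_bad_block_log` and `find_gas_mismatches` are hypothetical, not taken from the original script.

```python
import re

# Matches receipt lines in the BAD BLOCK report, e.g.
# "203: cumulative: 11152534 gas: 45752 contract: 0x... status: 1 tx: 0x63fe... logs: ..."
RECEIPT_LINE = re.compile(
    r"^\s*(?P<index>\d+): cumulative: (?P<cumulative>\d+) gas: (?P<gas>\d+) "
    r".*?\btx: (?P<tx>0x[0-9a-fA-F]{64})"
)


def parse_bad_block_log(text):
    """Return {tx_hash: gas_used} as computed locally by the failing node."""
    local_gas = {}
    for line in text.splitlines():
        match = RECEIPT_LINE.match(line)
        if match:
            local_gas[match.group("tx").lower()] = int(match.group("gas"))
    return local_gas


def find_gas_mismatches(local_gas, canonical_gas):
    """List (tx_hash, local, canonical) for every tx whose gas usage differs."""
    return [
        (tx, gas, canonical_gas[tx])
        for tx, gas in local_gas.items()
        if tx in canonical_gas and canonical_gas[tx] != gas
    ]
```

Any transaction returned by `find_gas_mismatches` is a candidate for the one that executed differently on the suspect node.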

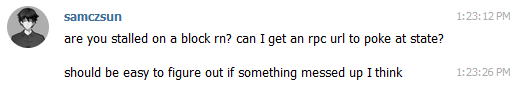

I knew that there must be some difference in state between Anatol's node and mainnet, so I DMed him for RPC access so that I could inspect the contracts that the offending transaction interacted with.

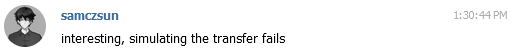

The transaction in question was a simple ERC20 transfer, so I quickly simulated it on Anatol's node, which failed.

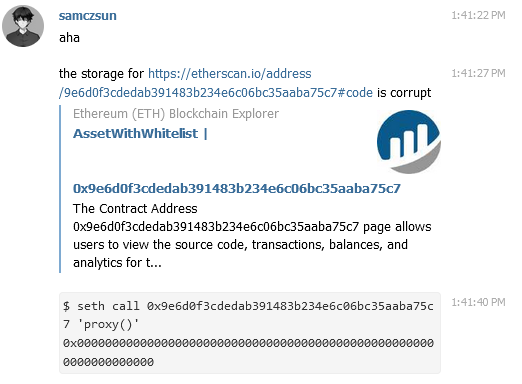

I quickly confirmed that the sending account contained enough tokens, so all that was left was to comb through each sub-call to see where the gas usage discrepancy came from. After wrangling with seth for a bit, I found the root cause.

This contract contains an address in storage slot 0 on mainnet but no address on Anatol's node, a clear indication that there was some storage corruption. Anatol revealed that he had actually restored the geth database from a backup, so most likely something got lost along the way and the node couldn't read the storage entry for that specific contract. Given that it'd be practically impossible to figure out what else was corrupted, I recommended that he give snap sync a shot to quickly get his node back up and running.
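
The same comparison can be expressed with the standard `eth_getStorageAt` JSON-RPC method instead of seth. The sketch below is only an illustration: `storage_request` and `compare_slot` are hypothetical helpers, and the two `send_to_*` arguments are assumed to be caller-supplied functions that post a request to a node and return the decoded response.

```python
def storage_request(address, slot, block="latest", request_id=1):
    """Build a JSON-RPC eth_getStorageAt request for one storage slot."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_getStorageAt",
        "params": [address, hex(slot), block],
    }


def compare_slot(send_to_suspect, send_to_reference, address, slot, block="latest"):
    """Read the same slot from both nodes and report whether they agree.

    Each send_to_* argument is a function (an assumption of this sketch) that
    sends a JSON-RPC request dict to a node and returns the response dict.
    """
    request = storage_request(address, slot, block)
    suspect = int(send_to_suspect(request)["result"], 16)
    reference = int(send_to_reference(request)["result"], 16)
    return suspect == reference, suspect, reference
```

A zero value on the suspect node where the reference node returns a non-zero address is the kind of discrepancy described above.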

Down the Rabbit Hole

Now that the immediate mystery was solved, I turned my attention to the corrupted contract. While debugging the transaction, I recognized the contract as an EToken2-issued token. I had reviewed that particular platform a long time ago, but that was before I gained all the experience I have now. Sometimes all it takes is a fresh approach, and I was feeling confident that things would be different this time.

I started by defining my goals. Since this was an ERC20 contract, any way to get free tokens (either through stealing them from others or minting them myself) would have the highest impact. Next would be any form of griefing such as burning tokens that I don't own or making it impossible to transfer tokens.

Now I could start reviewing. The token contract itself delegates the ERC20 functions such as transfer to an implementation contract, but interestingly the implementation may vary per user. Essentially, the token contains a default implementation (the latest version) which can be upgraded at any time by the owner. If a user doesn't agree with the upgrade, they can choose to opt out and pin their implementation to the current version instead of being dragged along to the new one. This was an extremely interesting mechanic, but I quickly confirmed that there was no way for me to set a custom implementation for myself, so I moved on.

Next up was the default implementation contract. Because the token wasn't using delegatecall but rather call, it needed to tell the implementation who the original caller was. If the implementation wasn't correctly authenticating the proxy, then it would be possible to spoof a transfer. Unfortunately, a quick check revealed that the implementation contract was secure as well.
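
To make the concern concrete, here is a toy model of that forwarding pattern. It is an assumption-laden sketch rather than the actual contracts: `TokenModel`, `ImplementationModel`, and `transfer_with_sender` are hypothetical names, and `msg_sender` is passed explicitly to stand in for the EVM's `msg.sender`.

```python
class ImplementationModel:
    """Receives forwarded calls and must verify that they come from the token."""

    def __init__(self, trusted_token):
        self.trusted_token = trusted_token

    def transfer_with_sender(self, msg_sender, original_sender, recipient, value):
        # Without this check, anyone could call in directly and claim to be
        # forwarding a transfer on behalf of an arbitrary original_sender.
        if msg_sender is not self.trusted_token:
            raise PermissionError("caller is not the token proxy")
        return (original_sender, recipient, value)


class TokenModel:
    """Forwards transfers with a plain call, so it must pass the caller along."""

    def __init__(self):
        self.implementation = ImplementationModel(trusted_token=self)

    def transfer(self, msg_sender, recipient, value):
        # With call (not delegatecall) the implementation sees the token as
        # msg.sender, so the real caller travels as an explicit argument.
        return self.implementation.transfer_with_sender(self, msg_sender, recipient, value)
```

A spoofing attempt would be a direct call to `transfer_with_sender` from any object other than the token, which this model rejects.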

After one more hop through the main token contract I finally arrived at the EToken2 platform contract, which is where all of the ERC20 logic is truly implemented. If the previous contracts were the appetizers, then this would be the main course, and I was excited to dig in.

Cake and a Drink

Earlier I had noticed that in order to be ERC20-compliant, each token contained two functions which emitted the Transfer and Approval events and which could only be called by the EToken2 contract. It would be extremely interesting if I could somehow trick the EToken2 contract into emitting fake events, so I focused on this first.

There was only one place in the EToken2 contract which triggered a token to emit the event, and that was in _proxyTransferEvent.

    function _proxyTransferEvent(uint _fromId, uint _toId, uint _value, bytes32 _symbol) internal {
        if (proxies[_symbol] != 0x0) {
            // Internal Out Of Gas/Throw: revert this transaction too;
            // Recursive Call: safe, all changes already made.
            Proxy(proxies[_symbol]).emitTransfer(_address(_fromId), _address(_toId), _value);
        }
    }

Interestingly though, the function didn't take the proxy address directly; it took the symbol and looked the proxy up. This was a classic setup for an indirection attack: if I could change the value of proxies[_symbol], then I would be able to trigger a Transfer event on any target I wanted.

There were three calls to _proxyTransferEvent: minting would trigger an event from address(0) to msg.sender, burning would trigger an event from the holder (from) to address(0), and transferring would trigger an event from the source (from) to the destination (to). The first two were not particularly useful because I could only control at most one of the two parameters, but the last was interesting because I could potentially trigger a Transfer event from an arbitrary address to an arbitrary address.

Unfortunately, here I ran into a problem. The only way to reach the _transfer function was through the proxyTransferFromWithReference function, and that function would only allow the address specified in proxies to trigger a transfer for the specific symbol. This meant that if I owned a token and updated my proxy to the proxy for another token, I would no longer be able to trigger the transfer to cause the exploit.

It seemed like this potential exploit was dead, but I was reluctant to give up on it so I scrolled around some more. It was then that I really looked closer at the function signature for _transfer.

    function _transfer(
        uint _fromId,
        uint _toId,
        uint _value,
        bytes32 _symbol,
        string _reference,
        uint _senderId
    ) internal checkSigned(_senderId, 1) returns (bool);

I had completely dismissed the checkSigned modifier because it was obviously a no-op in the default configuration. If it was significant, then users would have to submit some sort of signature with each transfer and that was obviously not happening. Now, with nowhere else to turn, I decided to look into what exactly checkSigned was doing.

    function _checkSigned(Cosigner _cosigner, uint _holderId, uint _required) internal returns(bool) {
        return _cosigner.consumeOperation(sha3(msg.data, _holderId), _required);
    }

    modifier checkSigned(uint _holderId, uint _required) {
        if (!isCosignerSet(_holderId) || _checkSigned(holders[_holderId].cosigner, _holderId, _required)) {
            _;
        } else {
            _error('Cosigner: access denied');
        }
    }

Incredible! It turns out that checkSigned actually performed an external call if a Cosigner was set for a user. This would explain why I never saw any users providing any signatures for a simple transfer. At the same time, this security feature was exactly what I needed to finalize my exploit.

I've talked about how reentrancy is really just a subset of what I've dubbed unsafe external calls, because the real risk is in the fact that control flow is handed to a third-party contract which can then go and do whatever it wants. The attacker could choose to reenter through the same function, but they could just as well do anything else, and this is a great example of that.

The problem I was facing was that I needed proxies[symbol] to be my own proxy before the transfer call, but a different value after I had begun the transfer process. Now with the cosigner functionality, I could set a custom cosigner which would swap my symbol's proxy when it receives a call to consumeOperation. This call would happen after the call to proxyTransferFromWithReference but before the call to _proxyTransferEvent, allowing me to trigger an arbitrary event on any EToken2-issued token!
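
The ordering problem can be reproduced in a toy model. The sketch below is not the EToken2 code: the class and method names are hypothetical, balances are omitted, and the cosigner writes to the proxy mapping directly where the real attack would go through whatever proxy-update function the platform exposes to a symbol's owner (an assumption here).

```python
class TokenProxy:
    """Stand-in for an EToken2-issued token; it only records Transfer events."""

    def __init__(self, name):
        self.name = name
        self.events = []

    def emit_transfer(self, sender, recipient, value):
        self.events.append((sender, recipient, value))


class PlatformModel:
    """Toy model of the platform's transfer path (balances omitted)."""

    def __init__(self):
        self.proxies = {}    # symbol -> TokenProxy allowed to act for it
        self.cosigners = {}  # holder -> cosigner object, if one is set

    def proxy_transfer(self, caller, symbol, sender, recipient, value):
        # Entry check: only the proxy registered for `symbol` may start a transfer.
        if self.proxies.get(symbol) is not caller:
            return False
        return self._transfer(symbol, sender, recipient, value)

    def _transfer(self, symbol, sender, recipient, value):
        cosigner = self.cosigners.get(sender)
        # checkSigned: control leaves the platform here if a cosigner is set.
        if cosigner is not None and not cosigner.consume_operation(self, symbol):
            return False
        # proxies[symbol] is read again, after the external call has returned.
        self.proxies[symbol].emit_transfer(sender, recipient, value)
        return True


class SwappingCosigner:
    """Attacker-controlled cosigner that repoints the symbol's proxy mid-transfer."""

    def __init__(self, target):
        self.target = target

    def consume_operation(self, platform, symbol):
        # Assumption: the attacker owns `symbol`, so the platform would accept
        # this proxy update; the model simply writes the mapping directly.
        platform.proxies[symbol] = self.target
        return True
```

In this model the entry check passes because the attacker's own proxy starts the transfer, yet the event is emitted by whichever token the cosigner swapped in.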

Pepper

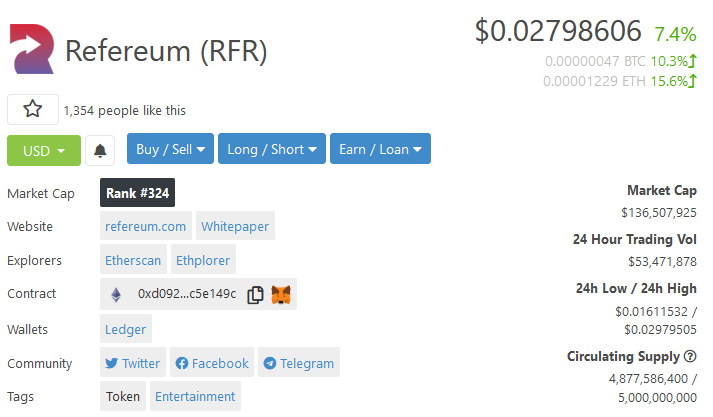

This was a good start, but the impact was not quite what I was looking for. This was because EToken2 is a whitelisted platform and only a centralized authority could issue new tokens. If I were an attacker and I wanted to pull off this attack, I would have to sign up and probably go through KYC/AML, which is obviously not ideal. It was also unclear who exactly might be affected by this bug, and I couldn't really go and test it for myself.

Undeterred, I stowed this finding away and kept looking for something else. While scanning through the contract, I noticed some interesting logic for transferring access from one user to another.

    function grantAccess(address _from, address _to) returns(bool) {
        if (!isCosignerSet(getHolderId(_from))) {
            _error('Cosigner not set');
            return false;
        }
        return _grantAccess(getHolderId(_from), _to);
    }

    function _grantAccess(uint _fromId, address _to) internal checkSigned(_fromId, 2) returns(bool) {
        // Should recover to previously unused address.
        if (getHolderId(_to) != 0) {
            _error('Should recover to new address');
            return false;
        }
        // We take current holder address because it might not equal _from.
        // It is possible to recover from any old holder address, but event should have the current one.
        address from = holders[_fromId].addr;
        holders[_fromId].addr = _to;
        holderIndex[_to] = _fromId;
        // Internal Out Of Gas/Throw: revert this transaction too;
        // Recursive Call: safe, all changes already made.
        eventsHistory.emitRecovery(from, _to, msg.sender);
        return true;
    }

As it happens, I was so engrossed in figuring out how exactly to swap around the proxy that I didn't pay attention to what was actually going on in the contract. Notice that in the signature for _transfer, the from and to values are actually uint, not address. The EToken2 contract maintains a mapping of users (address) to holder id (uint) and this was the logic for granting your holder id to another address.

The moment I realized this, I started studying this function in earnest. At a high level, this function essentially transferred ownership of some data from one entity to another, and one common mistake to make when implementing this sort of internal ownership transfer is to update the receiver correctly but forget to invalidate the sender. If the receiver isn't updated correctly, any sort of testing will instantly reveal that there's a problem. If the sender isn't invalidated correctly, simple tests might not notice anything wrong.

Looking closer at the implementation of _grantAccess, I saw that it was intentionally designed such that it's possible to determine the previous address that owned a specific holder id. This was intended to allow a user who accidentally granted access to the wrong wallet to still maintain ownership, but intuitively I knew something was off here.

Even still, I couldn't quite figure out what that was at first glance. After all, if an attacker were to grant access to their holder id to another user, that's just putting their own funds at risk. Why would anyone want to do that?

After stewing on it for a little bit, I realized that I was looking at it in the wrong way. The key was that while the other user would have full ownership over "my" holder id, I would also have full ownership over "their" holder id. In other words, I would be able to backdoor any new user of the EToken2 platform and gain full access to their account, which translates to control over any EToken2-issued token they owned.

It was a quick step from there to figure out how to weaponize this exploit. I needed to grant access to a user before they first used the EToken2 platform, but I couldn't just go around granting access to random addresses. The solution was clear: I could scan the mempool for transactions which would result in a user becoming registered with the EToken2 platform, and then frontrun any transactions I found. What's more, because of EToken2's architecture, I wouldn't just be attacking a single token but multiple tokens with multi-million dollar market caps.
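
The backdoor is easy to see in a toy model of the holder bookkeeping. This is a sketch under stated assumptions, not the real contract: `HolderRegistry` and its methods are hypothetical, the attacker's own cosigner is assumed to approve every operation, and `deposit` stands in for any transfer that registers and credits a new address.

```python
class HolderRegistry:
    """Toy model of the address -> holder id mapping and access granting."""

    def __init__(self):
        self.holder_index = {}   # address -> holder id (0 means unregistered)
        self.holder_addr = {}    # holder id -> current address
        self.balances = {}       # holder id -> token balance
        self.has_cosigner = set()
        self._next_id = 1

    def get_holder_id(self, address):
        return self.holder_index.get(address, 0)

    def _register(self, address):
        holder_id = self.get_holder_id(address)
        if holder_id == 0:
            holder_id = self._next_id
            self._next_id += 1
            self.holder_index[address] = holder_id
            self.holder_addr[holder_id] = address
            self.balances[holder_id] = 0
        return holder_id

    def set_cosigner(self, address):
        self.has_cosigner.add(self._register(address))

    def grant_access(self, from_address, to_address):
        from_id = self.get_holder_id(from_address)
        if from_id not in self.has_cosigner:
            return False
        if self.get_holder_id(to_address) != 0:
            return False  # may only grant access to a previously unused address
        self.holder_addr[from_id] = to_address
        self.holder_index[to_address] = from_id
        # holder_index[from_address] is left untouched, so the old address
        # keeps resolving to the same holder id.
        return True

    def deposit(self, address, amount):
        self.balances[self._register(address)] += amount

    def transfer(self, from_address, to_address, amount):
        from_id = self.get_holder_id(from_address)
        if from_id == 0 or self.balances[from_id] < amount:
            return False
        to_id = self._register(to_address)
        self.balances[from_id] -= amount
        self.balances[to_id] += amount
        return True

    def balance_of(self, address):
        return self.balances.get(self.get_holder_id(address), 0)
```

If the attacker calls `grant_access` for a victim's address before the victim is registered, both addresses resolve to the same holder id, and anything later credited to the victim can be moved by the attacker.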

At this point, I knew I had found what I was looking for, and it was time to contact the project.

52

Finding the bug was one thing, but fixing it was another. EToken2 had been designed to be non-upgradable, but forcing every single token issued on top of EToken2 to migrate their data to a new contract would be unduly burdensome. Oleksii, fellow white hat and also the head architect at Ambisafe, agreed. We had to find another way.

There weren't many things in _grantAccess that could revert. In fact, there was only one: the very last line in the function logged an event through the eventsHistory contract. If this call reverted, then so would the call to _grantAccess, and it would be impossible for anyone to backdoor another account. However, the EventsHistory contract didn't allow administrators to redefine the handler for an event.

    function addVersion(address _caller, string _name, string _changelog) noValue() checkAccess("admin") returns(bool) {
        if (versions[_caller] != 0) {
            return false;
        }
        if (bytes(_name).length == 0) {
            return false;
        }
        if (bytes(_changelog).length == 0) {
            return false;
        }
        uint version = ++latestVersion;
        versions[_caller] = version;
        versionInfo[version] = VersionInfo(block.number, msg.sender, _caller, _name, _changelog);
        return true;
    }

    function () noValue() {
        if (versions[msg.sender] == 0) {
            return;
        }
        // Internal Out Of Gas/Throw: revert this transaction too;
        // Call Stack Depth Limit reached: revert this transaction too;
        // Recursive Call: safe, all changes already made.
        if (!emitters[msg.sig].delegatecall(msg.data)) {
            throw;
        }
    }

It seemed like we were at a dead end, but we quickly realized the situation wasn't nearly as bad as it appeared. Although the EventsHistory contract was intended to be immutable, it too had a bug which could be "exploited" by the admins.

Each emitter was handled using a delegatecall, which causes the code to be executed with the current contract's context. If we wanted to perform an upgrade on the EventsHistory contract, we could just register a fake emitter which would perform the upgrades we wanted by directly writing to storage.
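
The trick can be illustrated with a small model in which "delegatecall" means running the handler against the caller's own storage. Everything here is a hypothetical sketch: `EventsHistoryModel`, `add_emitter`, and `upgrade_emitter` are illustrative names, and the write-once registration rule is an assumption based on the description above.

```python
class EventsHistoryModel:
    """Toy model: handlers are write-once but run against this object's storage."""

    def __init__(self, admin):
        self.storage = {"admin": admin, "emitters": {}, "versions": set()}

    def add_emitter(self, caller, signature, emitter):
        # Assumed rule: a handler can be registered once and never redefined.
        if caller != self.storage["admin"] or signature in self.storage["emitters"]:
            return False
        self.storage["emitters"][signature] = emitter
        return True

    def add_version(self, caller, allowed_sender):
        if caller != self.storage["admin"]:
            return False
        self.storage["versions"].add(allowed_sender)
        return True

    def fallback(self, sender, signature, *args):
        if sender not in self.storage["versions"]:
            return None
        # "delegatecall": the handler's code runs with OUR storage, so it can
        # read and write anything this contract could.
        return self.storage["emitters"][signature](self.storage, *args)


def upgrade_emitter(storage, signature, new_handler):
    """Fake emitter: instead of logging, overwrite an existing handler slot."""
    storage["emitters"][signature] = new_handler
    return True
```

Registering `upgrade_emitter` under a fresh signature is allowed by the write-once rule, and calling it through `fallback` then replaces a handler that `add_emitter` itself would refuse to touch.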

Oleksii quickly wrote a few contracts to patch up the vulnerabilities I had found. The first would replace the handler for the emitRecovery function and would require that any addresses being granted access had explicitly opted in to being granted access. The second would replace the handler for all ERC20-related events and would require that the proxy contract for a given symbol was the expected proxy. The third would perform the upgrade on the EventsHistory contract by replacing the handlers and then forever disable the ability to register new emitters, making the contract truly immutable.

On April 6th, a mere 4 days after I had taken that first step, the upgrades were deployed and this saga was forever immortalized on the blockchain.

Acknowledgments: Special thanks to Anatol for the very special role he played in this and Oleksii for sacrificing his weekend in order to resolve this with me.

Disclaimer: This post is for general information purposes only. It does not constitute investment advice or a recommendation or solicitation to buy or sell any investment and should not be used in the evaluation of the merits of making any investment decision. It should not be relied upon for accounting, legal or tax advice or investment recommendations. This post reflects the current opinions of the authors and is not made on behalf of Paradigm or its affiliates and does not necessarily reflect the opinions of Paradigm, its affiliates or individuals associated with Paradigm. The opinions reflected herein are subject to change without being updated.